This article covers scraping HTML tables with JavaScript, from basic techniques to advanced practices, so that developers can collect and process tabular data from web pages efficiently.

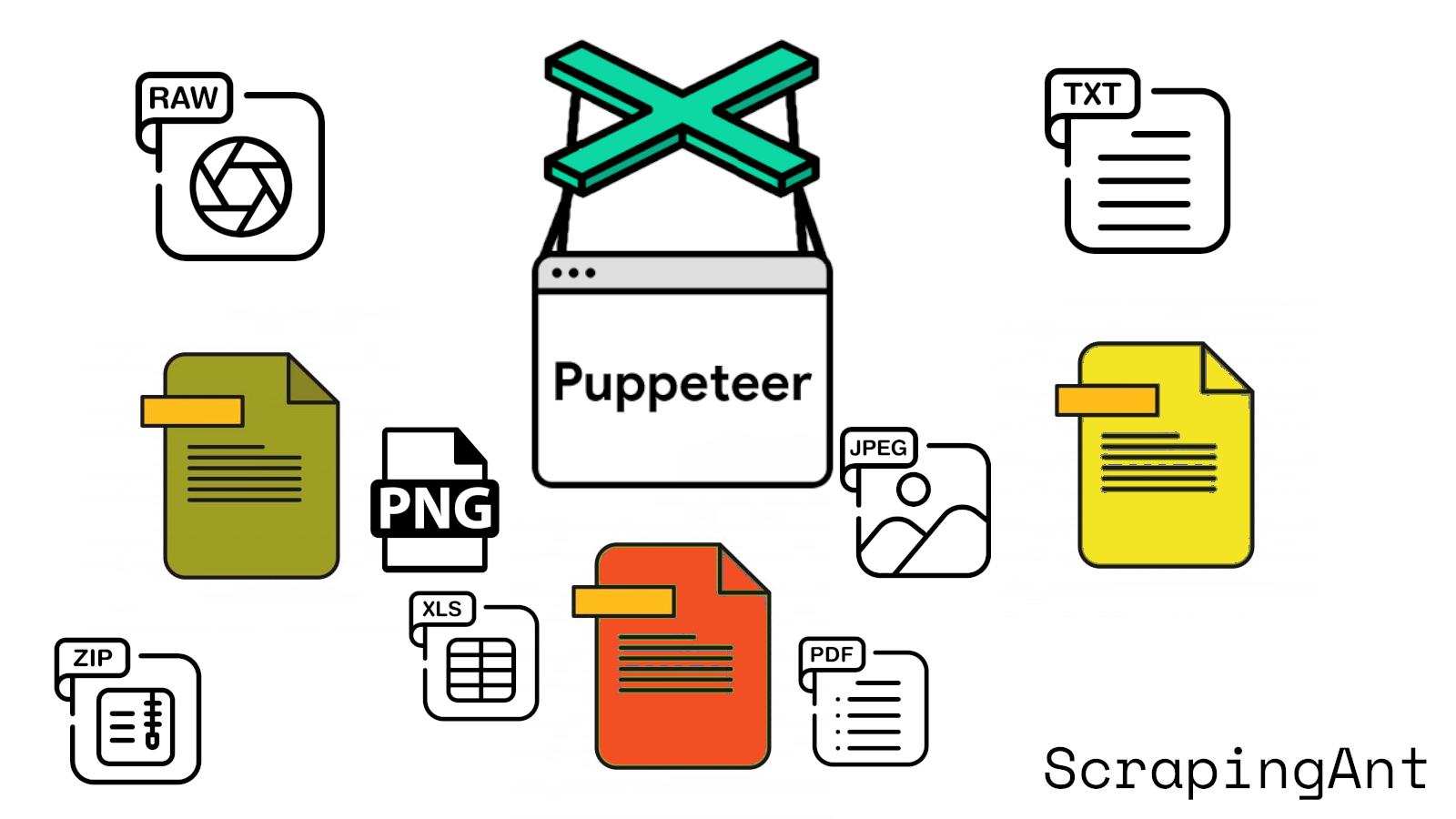

JavaScript has a mature ecosystem of libraries and tools for web scraping. For static pages, Axios fetches the HTML over HTTP and Cheerio parses it into a document you can query with jQuery-style selectors, which is enough to build efficient and reliable scrapers (Axios documentation, Cheerio documentation). For tables that are rendered by client-side JavaScript, browser automation tools such as Puppeteer and Playwright load the page in a real browser so that the dynamic content exists before you extract it (Puppeteer documentation).

This guide walks through setting up a scraping environment, implementing basic scraping techniques, and applying advanced methods for dynamic content and complex table structures. It also discusses the ethical considerations behind responsible and lawful scraping. By the end, you'll have a solid foundation in scraping HTML tables with JavaScript and the knowledge to tackle a wide range of scraping challenges.