In the rapidly evolving landscape of web technologies, web scraping has emerged as a crucial tool for data extraction and analysis. As of 2024, two programming languages, JavaScript and Python, stand out as popular choices for developers engaging in web scraping tasks. Each language offers unique strengths and capabilities, making the decision between them a significant consideration for developers at all levels.

This article provides a comprehensive comparison of JavaScript and Python for web scraping in 2024, examining their respective advantages, limitations, and use cases. Whether you are a seasoned developer or just starting your journey in web scraping, understanding the nuances of these languages will empower you to make informed decisions tailored to your specific project needs.

Popularity and Community Support

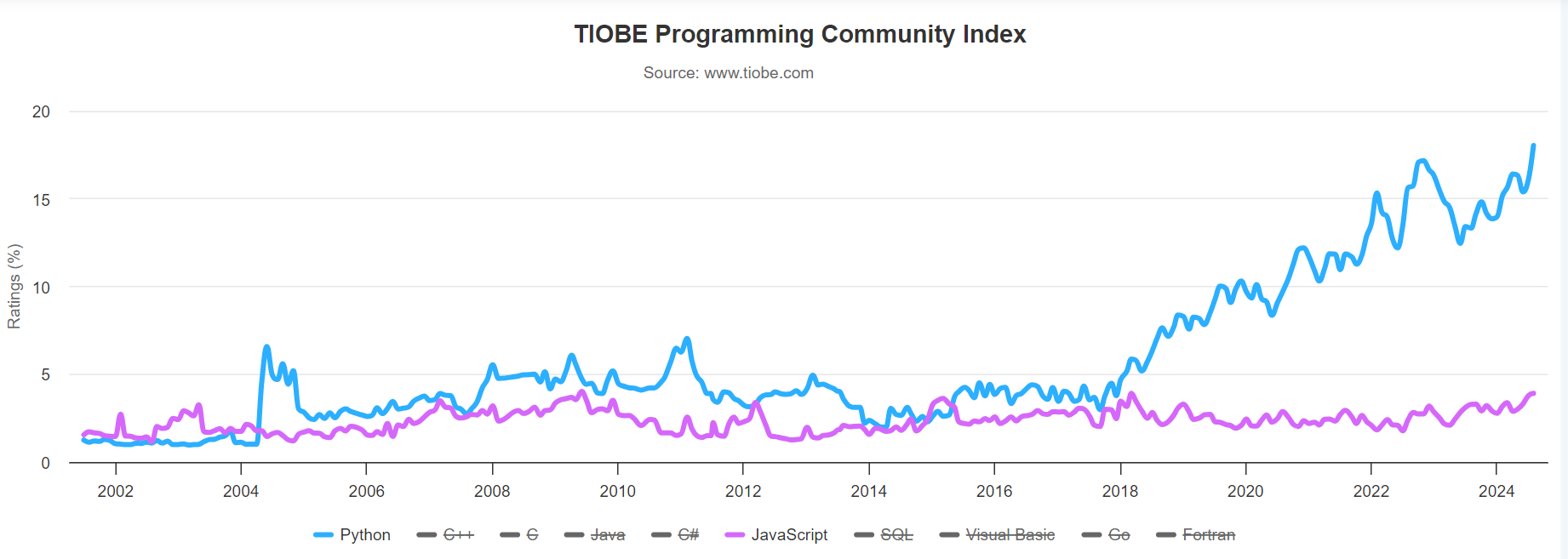

In 2024, both JavaScript and Python continue to be popular choices for web scraping, each with a strong community and extensive libraries. Python remains a favorite due to its simplicity and the powerful libraries like Beautiful Soup and Scrapy.

According to the TIOBE Index, Python has consistently ranked as one of the top programming languages, largely due to its versatility and ease of use. JavaScript, on the other hand, is the backbone of web development, and its use in web scraping has grown with the advent of Node.js and libraries like Puppeteer and Cheerio.

Libraries and Tools

Python offers a rich set of libraries for web scraping:

- BeautifulSoup: This library is known for its ease of use and ability to parse HTML and XML documents. It provides Pythonic idioms for iterating, searching, and modifying the parse tree (BeautifulSoup Documentation).

- Scrapy: A powerful and fast web scraping framework that allows developers to extract data from websites and process it as needed. Scrapy is particularly useful for large-scale scraping projects (Scrapy Documentation).

- Selenium: Although primarily used for web testing, Selenium is also popular for scraping dynamic content by automating browser actions (Selenium Documentation).

JavaScript has also developed robust tools for web scraping:

- Puppeteer: A Node.js library that provides a high-level API over the Chrome DevTools Protocol. It is particularly useful for scraping dynamic content and interacting with web pages (Puppeteer Documentation).

- Cheerio: A fast, flexible, and lean implementation of core jQuery designed specifically for the server. It is used for parsing and manipulating HTML (Cheerio Documentation).

- Axios: While not a scraping library per se, Axios is a promise-based HTTP client for the browser and Node.js, often used to fetch data from web pages (Axios Documentation).

Performance and Scalability

When it comes to performance and scalability, Python and JavaScript offer different strengths:

- Python: Python's libraries like Scrapy are optimized for performance and can handle large-scale scraping tasks efficiently. Scrapy's asynchronous capabilities allow it to manage multiple requests simultaneously, making it suitable for scraping large datasets.

- JavaScript: With Node.js, JavaScript can handle asynchronous operations efficiently, which is crucial for web scraping tasks that involve multiple requests. Puppeteer, in particular, can manage headless browser instances effectively, making it a strong choice for scraping dynamic content.

Handling Dynamic Content

Dynamic content, often loaded via JavaScript, poses a challenge for web scrapers. Both Python and JavaScript have tools to address this:

- Python: Selenium is the go-to tool for handling dynamic content in Python. It automates browser actions, allowing developers to interact with web pages as a user would. However, this can be resource-intensive and slower compared to headless solutions.

- JavaScript: Puppeteer excels in handling dynamic content by controlling a headless version of Chrome. It can execute JavaScript on the page, making it ideal for scraping content that loads dynamically (Puppeteer vs. Selenium).

Ease of Use and Learning Curve

The ease of use and learning curve can significantly impact a developer's choice between Python and JavaScript for web scraping:

- Python: Known for its readability and simplicity, Python is often recommended for beginners. The language's syntax is straightforward, and libraries like BeautifulSoup provide intuitive methods for parsing HTML.

- JavaScript: While JavaScript is essential for web development, its asynchronous nature and the complexity of Node.js can present a steeper learning curve for those new to programming. However, for developers already familiar with JavaScript, using libraries like Puppeteer can be straightforward.

Integration with Web Technologies

JavaScript: As the backbone of web development, JavaScript naturally integrates well with web technologies. Its ability to execute within the browser environment allows it to interact seamlessly with web pages. Tools like Puppeteer leverage this integration, providing a high-level API to control headless Chrome, making it ideal for scraping tasks that require interaction with JavaScript-heavy websites.

Python: Although not inherently a web language, Python's libraries like Selenium and Playwright enable it to interact with web technologies effectively. These tools can automate browser actions, allowing Python to handle dynamic content and JavaScript execution, albeit with potentially higher resource consumption compared to JavaScript's native capabilities. (Playwright vs. Selenium)

Data Extraction and Parsing Capabilities

JavaScript: Libraries like Cheerio provide a fast and flexible way to parse and manipulate HTML, mimicking jQuery's syntax. This makes it easy to extract data from static web pages. However, for more complex parsing tasks, JavaScript may require additional libraries or custom code to achieve the same level of functionality as Python.

Python: Known for its robust data extraction capabilities, Python offers libraries like BeautifulSoup and lxml that provide powerful tools for parsing HTML and XML documents. These libraries offer intuitive methods for navigating and searching the parse tree, making them highly effective for complex data extraction tasks.

Asynchronous Processing and Concurrency

JavaScript: Built on the Node.js runtime, JavaScript excels in asynchronous processing. Its non-blocking I/O model allows it to handle multiple requests concurrently, making it highly efficient for web scraping tasks that involve fetching data from multiple sources simultaneously. Libraries like Axios and native promises further enhance its asynchronous capabilities.

Python: Python's asynchronous capabilities have improved significantly with the introduction of asyncio and libraries like aiohttp. These tools enable Python to perform asynchronous HTTP requests and manage concurrency effectively. However, Python's Global Interpreter Lock (GIL) can sometimes limit its concurrency performance compared to JavaScript.

Error Handling and Debugging

JavaScript: JavaScript's asynchronous nature can complicate error handling, especially when dealing with promises and callbacks. However, modern JavaScript provides robust error handling mechanisms through try-catch blocks and the use of async/await syntax, which simplifies debugging asynchronous code. Tools like Chrome DevTools offer powerful debugging capabilities for JavaScript, allowing developers to inspect and manipulate the DOM in real-time.

Python: Python is renowned for its clear and informative error messages, which aid in debugging. The language's exception handling mechanism is straightforward, making it easy to catch and handle errors. Python's interactive shell and debugging tools like pdb provide a user-friendly environment for testing and debugging web scraping scripts.

Cross-Platform Compatibility

JavaScript: As a language that runs in the browser, JavaScript is inherently cross-platform. Node.js extends this compatibility to server-side applications, allowing JavaScript to run on any system that supports Node.js. This makes JavaScript a versatile choice for web scraping tasks that need to be deployed across various environments.

Python: Python is also highly cross-platform, with interpreters available for all major operating systems. Its extensive standard library and third-party packages are designed to work consistently across platforms. However, certain libraries, particularly those that interface with system-specific features, may require additional configuration to ensure compatibility.

Comparison Table

The following comparison table highlights key aspects of JavaScript and Python for web scraping in 2024:

| Feature/Aspect | JavaScript | Python |

|---|---|---|

| Ease of Use | Moderate - Requires understanding of async/await | High - Simple syntax and extensive documentation |

| Performance | High - Efficient with asynchronous operations | Moderate - Can be slower with synchronous code |

| Library Support | Strong - Puppeteer, Playwright | Extensive - Beautiful Soup, Scrapy, Selenium |

| Community Support | Large - Active web development community | Large - Strong data science and web scraping community |

| Handling JavaScript-Heavy Sites | Excellent - Native support through Node.js | Good - Requires additional tools like Selenium |

| Scalability | High - Suitable for large-scale scraping tasks | High - Well-suited for both small and large projects |

| Learning Curve | Steeper for beginners | Gentle - Ideal for beginners |

Conclusion

In conclusion, both JavaScript and Python offer powerful solutions for web scraping, each with distinct advantages. Python's simplicity, extensive library support, and scalability make it a preferred choice for many developers. JavaScript, with its native integration with web technologies and ability to handle dynamic content, provides a compelling alternative.

The choice between the two ultimately depends on the specific requirements of the scraping project, the developer's familiarity with the language, and the nature of the target websites.