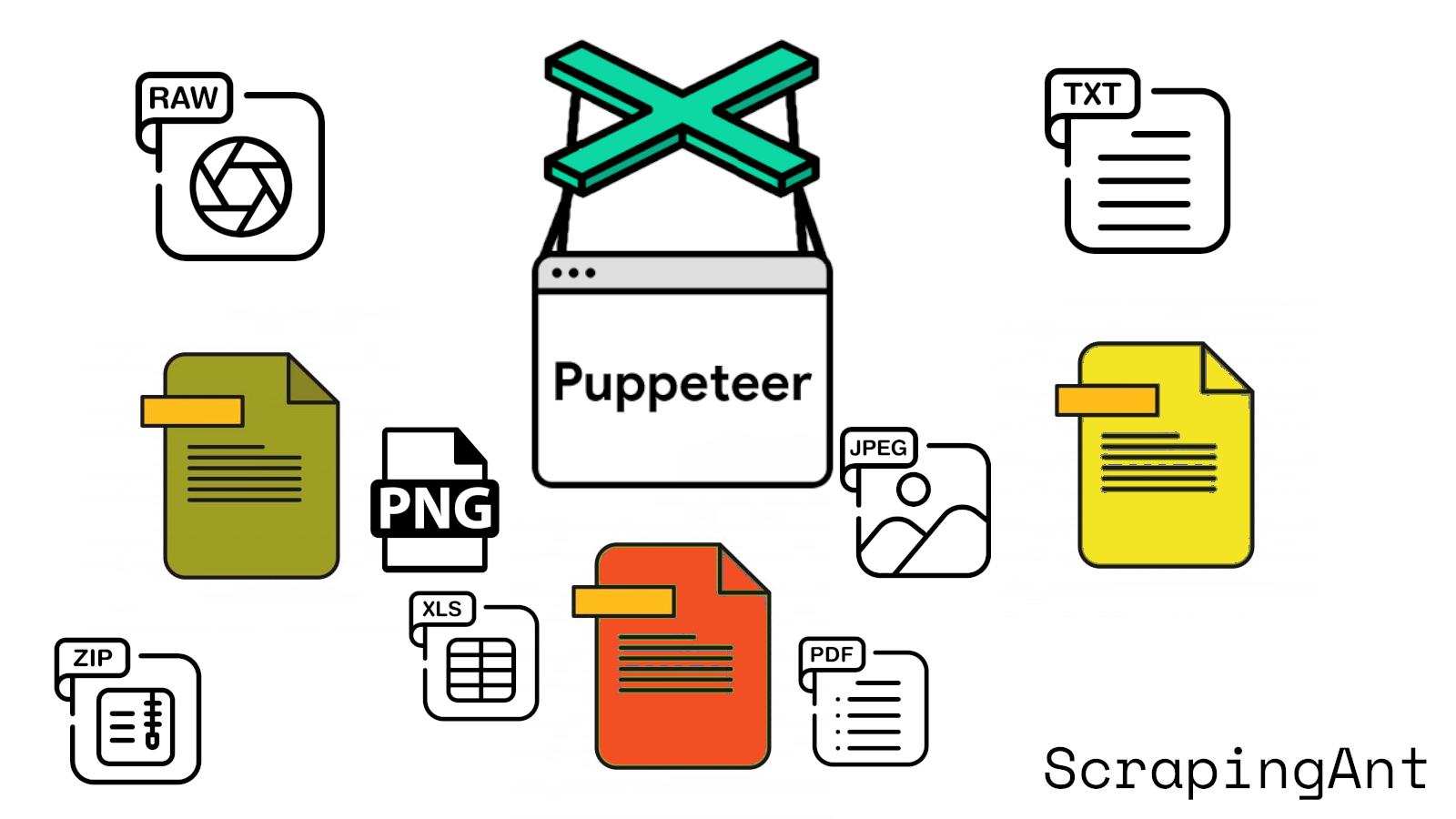

Puppeteer, a powerful Node.js library, allows developers to control Chrome or Chromium over the DevTools Protocol. Its high-level API facilitates a wide range of web automation tasks, including file downloads. This guide aims to provide a comprehensive overview of setting up Puppeteer for automated file downloads, using various methods and best practices to ensure efficiency and reliability. Whether you're scraping data, automating repetitive tasks, or handling protected content, Puppeteer offers robust tools to streamline the process.

To get started with Puppeteer, you'll need Node.js installed on your machine and a basic understanding of JavaScript and Node.js. Once installed, Puppeteer provides several ways to download files, including using the browser's fetch feature, simulating user interaction, leveraging the Chrome DevTools Protocol (CDP), and combining Puppeteer with HTTP clients like Axios. Each method has its unique advantages and is suited for different use cases.

Throughout this guide, we'll explore detailed steps for configuring Puppeteer for file downloads, handling various file types and MIME types, managing download timeouts, and implementing error handling. Additionally, we'll cover advanced topics such as handling authentication, managing dynamic content, and monitoring download progress. By following these best practices and considerations, you can create robust and efficient file download scripts using Puppeteer.

For more detailed code examples and explanations, you can refer to the Puppeteer API documentation and other relevant resources mentioned throughout this guide.

This guide is a part of the series on web scraping and file downloading with different web drivers and programming languages. Check out the other articles in the series:

- How to download a file with Selenium in Python?

- How to download a file with Puppeteer?

- How to download a file with Playwright?

Setting Up Puppeteer for File Downloads

Introduction

This section walks through setting up Puppeteer for automated file downloads. By the end of it, you'll be able to configure Puppeteer to download files reliably from typical websites.

Prerequisites

Before we dive into the setup, make sure you have the following prerequisites:

- Node.js installed on your machine

- Basic understanding of JavaScript and Node.js

Installation

To begin using Puppeteer for file downloads, install it in your project by running the following command in your terminal:

```bash
npm install puppeteer
```

This installs the latest version of Puppeteer and downloads a compatible browser build (recent releases fetch Chrome for Testing; older releases fetched Chromium). For more information, visit the Puppeteer npm page.

Project Configuration

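After installation, create a new JavaScript file for your project (e.g., download.js) and import Puppeteer at the top of it:

```javascript
const puppeteer = require('puppeteer');
```

The snippets that follow use `await`, so in a CommonJS file they must run inside an `async` function, such as an immediately invoked `(async () => { ... })();`.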

Browser Launch Configuration

When setting up Puppeteer for file downloads, start with the browser launch options. The settings below are not required for downloading, but a visible, maximized window makes it easier to watch and debug the process:

```javascript
const browser = await puppeteer.launch({
  headless: false,
  defaultViewport: null,
  args: ['--start-maximized']
});
```

- `headless: false` launches the browser with a visible window instead of in headless mode.
- `defaultViewport: null` lets the page use the full window size instead of Puppeteer's default 800x600 viewport.
- `args: ['--start-maximized']` starts the browser in a maximized window.

Page Creation and Configuration

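Create a new page and allow downloads. Many tutorials call `page._client.send(...)`, but `_client` is a private property whose shape has changed between Puppeteer releases; opening a DevTools Protocol (CDP) session through the public API is more reliable:

```javascript
const page = await browser.newPage();

// Open a CDP session for this page through the public API
const client = await page.target().createCDPSession();

await client.send('Page.setDownloadBehavior', {
  behavior: 'allow',
  downloadPath: '/path/to/download/directory' // use an absolute path
});
```

This allows downloads and specifies the directory where files should be saved. `Page.setDownloadBehavior` is marked deprecated in the DevTools Protocol in favor of `Browser.setDownloadBehavior`. Refer to the Puppeteer API documentation for more details.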


Replace /path/to/download/directory with the actual path where you want the files to be saved.

Handling Download Events

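Puppeteer does not provide direct methods to track download progress or completion. Use a combination of page events and file system monitoring:

```javascript
const fs = require('fs');
const path = require('path');

const downloadDir = '/path/to/download/directory';

page.on('response', async (response) => {
  const contentDisposition = response.headers()['content-disposition'];
  if (!contentDisposition || !contentDisposition.includes('attachment')) return;

  // Read filename="..." or filename=token from the header
  const match = /filename=(?:"([^"]+)"|([^;]+))/i.exec(contentDisposition);
  if (!match) return;
  const filename = (match[1] || match[2]).trim();
  const downloadPath = path.join(downloadDir, filename);

  // Poll for the final file name, giving up after 60 seconds
  const deadline = Date.now() + 60000;
  while (!fs.existsSync(downloadPath)) {
    if (Date.now() > deadline) {
      console.error(`Timed out waiting for ${filename}`);
      return;
    }
    await new Promise((resolve) => setTimeout(resolve, 100));
  }

  console.log(`File downloaded: ${filename}`);
});
```

This code listens for responses, picks out those sent as attachments, and polls the download directory until the file appears (or a timeout expires) before logging a message. Chrome writes an in-progress download to a temporary `.crdownload` file and renames it when the transfer finishes, so the final file name appearing is a reasonable completion signal. If a file with the same name already exists, Chrome saves the new one under a different name, so start with an empty directory. Refer to the Node.js File System documentation for more information.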
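A simple split or regular expression on `filename=` misses the extended `filename*=` form (RFC 6266), which servers use for non-ASCII names and which carries a percent-encoded value. A minimal sketch of a parser that handles both forms follows; `filenameFromContentDisposition` is a hypothetical helper, and it assumes the extended value is UTF-8.

```javascript
// Hypothetical helper: extract a filename from a Content-Disposition header.
// Prefers the extended `filename*=` form and falls back to `filename=`.
// Returns null when neither parameter is present.
function filenameFromContentDisposition(header) {
  if (!header) return null;

  // filename*=charset'language'percent-encoded-value (charset assumed UTF-8)
  const extended = /filename\*\s*=\s*([^']*)'[^']*'([^;]+)/i.exec(header);
  if (extended) {
    try {
      return decodeURIComponent(extended[2].trim()).split(/[\\/]/).pop();
    } catch {
      // Malformed percent-encoding: fall back to the plain parameter
    }
  }

  // filename="quoted value" or filename=token
  const plain = /filename\s*=\s*(?:"((?:[^"\\]|\\.)*)"|([^;]+))/i.exec(header);
  if (!plain) return null;
  const value = plain[1] !== undefined ? plain[1].replace(/\\(.)/g, '$1') : plain[2].trim();

  // Keep only the last path segment so a server cannot point outside the folder
  return value.split(/[\\/]/).pop();
}
```

Stripping directory components matters because the header value comes from the server and is later joined onto a local path.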

Error Handling

Implement robust error handling to gracefully handle issues during the download process:

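```javascript
const browser = await puppeteer.launch();

try {
  const page = await browser.newPage();
  // Your download code here
} catch (error) {
  console.error('An error occurred during the download:', error);
  // Implement appropriate error handling logic
} finally {
  await browser.close(); // runs whether the download succeeded or failed
}
```

This structure catches and logs any errors that occur during the download process and ensures the browser is closed regardless of the outcome. Launch the browser before the `try` block so that `browser` is defined when `finally` runs.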

Timeout Configuration

Set appropriate timeouts to prevent your script from hanging indefinitely:

```javascript
page.setDefaultNavigationTimeout(60000); // navigation, e.g. page.goto(): 60 seconds
page.setDefaultTimeout(30000);           // other waits, e.g. waitForSelector(): 30 seconds
```

These settings define the maximum time Puppeteer will wait for navigation and other operations before throwing a timeout error. More details can be found in the Puppeteer Timeout documentation.

User Agent Configuration

Some websites may block or behave differently with automated browsers. Set a realistic user agent to mimic a regular browser:

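```javascript
await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36');
```

Headless Chrome's default user agent contains the token "HeadlessChrome", which some sites use to spot automation; a regular user agent removes that signal, although sites can still detect automation in other ways. Refer to the MDN User-Agent documentation for more details.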
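A hard-coded user agent goes stale as Chrome updates. A sketch, assuming you only want to drop the headless marker: read the launched browser's own user agent with `browser.userAgent()` and replace the `HeadlessChrome` token that headless Chrome typically reports. `useRealisticUserAgent` is a hypothetical helper name.

```javascript
// Hypothetical helper: reuse the real browser's user agent without the
// "HeadlessChrome" token. `browser` and `page` are Puppeteer objects.
async function useRealisticUserAgent(browser, page) {
  const original = await browser.userAgent();
  const cleaned = original.replace('HeadlessChrome', 'Chrome');
  await page.setUserAgent(cleaned);
  return cleaned;
}
```

This keeps the version number in step with the browser Puppeteer actually launched.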

Handling Authentication

If the file you’re trying to download is behind a login, handle authentication as follows:

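```javascript
await page.goto('https://example.com/login');
await page.type('#username', 'your_username');
await page.type('#password', 'your_password');

// Start waiting for the navigation before clicking, so a fast redirect is not missed
await Promise.all([
  page.waitForNavigation(),
  page.click('#login-button')
]);
```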

Adjust the selectors (#username, #password, #login-button) according to the specific website you’re working with.
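Logging in on every run is slow and can trigger extra checks. One option, sketched here with hypothetical helper names and a file path of your choosing, is to save the session cookies after logging in with `page.cookies()` and restore them with `page.setCookie()` in later runs. The saved file holds session credentials, so keep it private. Recent Puppeteer releases also offer browser-level cookie methods, so check the API for your version.

```javascript
const fs = require('fs');

// Hypothetical helper: write the page's current cookies to a JSON file
async function saveCookies(page, filePath) {
  const cookies = await page.cookies();
  fs.writeFileSync(filePath, JSON.stringify(cookies, null, 2));
  return cookies.length;
}

// Hypothetical helper: restore saved cookies; returns false if none were saved
async function loadCookies(page, filePath) {
  if (!fs.existsSync(filePath)) return false;
  const cookies = JSON.parse(fs.readFileSync(filePath, 'utf8'));
  await page.setCookie(...cookies);
  return true;
}
```

For example, call `saveCookies(page, 'cookies.json')` once the login navigation has completed, and at the start of a later run call `loadCookies(page, 'cookies.json')` before navigating to the download page.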

Handling Captchas

Implement strategies to handle CAPTCHAs:

- Use a CAPTCHA solving service (e.g., 2captcha, Anti-Captcha).

- Implement manual solving by pausing the script and allowing human intervention.

- Look for alternative APIs or methods provided by the website that don’t require CAPTCHA solving.

Here’s a basic example of pausing for manual CAPTCHA solving:

```javascript
await page.goto('https://example.com/download-page');

if (await page.$('#captcha-element')) {
  console.log('CAPTCHA detected. Please solve it manually.');
  // Wait up to 5 minutes for the CAPTCHA element to disappear
  await page.waitForSelector('#captcha-element', { hidden: true, timeout: 300000 });
}
```

This code checks for a CAPTCHA element and waits for it to disappear, assuming it has been solved manually. Manual solving requires a visible browser window, so launch with `headless: false`.

Handling Dynamic Content

Wait for specific conditions to ensure Puppeteer can interact with dynamically loaded content:

```javascript
await page.waitForSelector('#download-button');
await page.click('#download-button');
```

This code waits for a download button to appear before clicking it. More details can be found in the Puppeteer waitForSelector documentation.

Monitoring Network Traffic

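Monitor network traffic to gain more insight into the download process. The `request` event fires without request interception; `page.setRequestInterception(true)` is only needed when you want to modify, block, or answer requests, and once it is enabled every request must be explicitly continued, aborted, or responded to:

```javascript
// Log every outgoing request; observing does not require interception
page.on('request', (request) => {
  console.log('Request URL:', request.url());
});
```

Logging requests can be helpful for debugging or for identifying the exact URL of the file you're trying to download. Refer to the Puppeteer Network Monitoring documentation for more information.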
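Request URLs alone do not reveal which response is the file. A small sketch that inspects response headers instead, assuming the `page` object from the earlier snippets; `looksLikeDownload`, `trackDownloadResponses`, and the MIME list are illustrative assumptions to adapt to the file types you expect. Puppeteer reports response header names in lowercase.

```javascript
// Illustrative list of MIME types treated as downloads
const DOWNLOAD_MIME_TYPES = ['application/pdf', 'application/octet-stream', 'application/zip', 'text/csv'];

// True if the headers mark the response as an attachment or a listed file type
function looksLikeDownload(headers) {
  const type = (headers['content-type'] || '').split(';')[0].trim().toLowerCase();
  const disposition = (headers['content-disposition'] || '').toLowerCase();
  return disposition.startsWith('attachment') || DOWNLOAD_MIME_TYPES.includes(type);
}

// Collect candidate file responses as they arrive; returns the live array
function trackDownloadResponses(page) {
  const found = [];
  page.on('response', (response) => {
    const headers = response.headers();
    if (looksLikeDownload(headers)) {
      found.push({ url: response.url(), status: response.status(), type: headers['content-type'] });
      console.log('Possible file download:', response.url());
    }
  });
  return found;
}
```

Call `trackDownloadResponses(page)` before navigating, then inspect the returned array once the page has loaded.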

Conclusion

By following these detailed steps and considerations, you can effectively set up Puppeteer for downloading files. Remember to adjust the code examples to fit your specific use case and the website you’re interacting with. Always respect website terms of service and implement appropriate error handling and timeouts to ensure robust performance of your file download script.

Methods for Downloading Files with Puppeteer

Direct Download Using Browser's Fetch Feature

One of the primary methods for downloading files with Puppeteer involves utilizing the browser's built-in fetch functionality. This approach allows developers to capture the file content directly in a JavaScript variable, providing greater control over the downloaded data. This method is particularly useful when dealing with files that are not directly linked to download buttons or when you need to process the file content before saving.

Implementation Steps:

Navigate to the target page: Use Puppeteer to open the webpage containing the file to be downloaded.

Locate the file URL: Identify the URL of the file you wish to download. This can often be found in the `href` attribute of a link or the `src` attribute of a resource.

Use page.evaluate(): Employ Puppeteer's `page.evaluate()` method to execute client-side JavaScript that fetches the file.

Fetch the file: Within the `page.evaluate()` function, use the `fetch()` API to retrieve the file content.

Convert to Base64: Convert the fetched file to a Base64-encoded string for easy handling.

Return the data: Pass the Base64 string back to the Node.js environment.

Decode and save: In Node.js, decode the Base64 string and save it as a file.

Example code snippet:

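```javascript
const puppeteer = require('puppeteer');
const fs = require('fs');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  // Open the page that links to the file, so fetch() runs with the site's origin and cookies
  await page.goto('https://example.com/download-page');

  const fileUrl = 'https://example.com/file.pdf';

  const base64Data = await page.evaluate(async (url) => {
    const response = await fetch(url);
    if (!response.ok) throw new Error(`HTTP ${response.status}`);
    const blob = await response.blob();

    // Read the blob as a data URL and keep only the Base64 part after the comma
    return new Promise((resolve, reject) => {
      const reader = new FileReader();
      reader.onloadend = () => resolve(reader.result.split(',')[1]);
      reader.onerror = () => reject(reader.error);
      reader.readAsDataURL(blob);
    });
  }, fileUrl);

  // Decode in Node.js and write the bytes to disk
  fs.writeFileSync('downloaded_file.pdf', Buffer.from(base64Data, 'base64'));

  await browser.close();
})();
```

Because the data crosses from the browser to Node.js as a Base64 string, it is about a third larger than the file itself, so this method suits small and medium-sized files best.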

Simulating User Interaction for Download

Simulating user interaction is a common approach to trigger file downloads, mimicking the process a human user would follow. This method is ideal for websites that use standard download mechanisms and when you want to save files directly to a specified directory.

Key Steps:

Configure download behavior: Set up Puppeteer to handle downloads automatically.

Navigate to the page: Load the webpage containing the download link or button.

Locate the download element: Use Puppeteer's selectors to find the download button or link.

Trigger the download: Simulate a click on the download element.

Wait for download: Implement a waiting mechanism to ensure the file is completely downloaded.

Example implementation:

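```javascript
const puppeteer = require('puppeteer');
const path = require('path');
const fs = require('fs');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  const downloadPath = path.resolve(__dirname, 'downloads');
  fs.mkdirSync(downloadPath, { recursive: true });

  // Allow downloads into downloadPath through a public CDP session
  const client = await page.target().createCDPSession();
  await client.send('Page.setDownloadBehavior', {
    behavior: 'allow',
    downloadPath
  });

  await page.goto('https://example.com/download-page');

  // Click the download button
  await page.click('#download-button');

  // Wait for the site's "download complete" indicator (placeholder selector;
  // if the site shows none, watch the download folder instead)
  await page.waitForSelector('#download-complete-indicator', { timeout: 60000 });

  await browser.close();
})();
```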

Using Chrome DevTools Protocol (CDP)

For more advanced control over the download process, developers can leverage the Chrome DevTools Protocol (CDP) through Puppeteer. This method provides the most flexibility and control over the download process, making it suitable for complex scraping scenarios.

Advantages of CDP:

- Fine-grained control over browser behavior

- Ability to customize download paths

- Enhanced monitoring of download progress

Implementation:

Enable CDP: Connect to the Chrome DevTools Protocol.

Configure download settings: Use CDP commands to set download behavior and paths.

Monitor download progress: Utilize CDP events to track file downloads.

Example code:

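```javascript
const puppeteer = require('puppeteer');
const path = require('path');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  // Browser-level CDP session: Browser.setDownloadBehavior applies to the whole browser
  const client = await browser.target().createCDPSession();
  await client.send('Browser.setDownloadBehavior', {
    behavior: 'allow',
    downloadPath: path.resolve(__dirname, 'downloads'),
    eventsEnabled: true // emit Browser.downloadWillBegin / Browser.downloadProgress
  });

  // Register the listener before clicking so no progress events are missed
  const downloadDone = new Promise((resolve, reject) => {
    client.on('Browser.downloadProgress', (event) => {
      if (event.state === 'completed') resolve(event);
      else if (event.state === 'canceled') reject(new Error('Download canceled'));
    });
  });

  await page.goto('https://example.com/download-page');
  await page.click('#download-button');

  // Wait for the actual completion event instead of a fixed delay
  await downloadDone;
  console.log('Download completed');

  await browser.close();
})();
```

Each `Browser.downloadProgress` event also carries `receivedBytes` and `totalBytes`, which can be used to report progress while the download runs.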
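When one click starts several downloads, a single `completed` event does not tell you when all of them are done. A sketch, assuming a browser-level `client` session configured with `Browser.setDownloadBehavior` and `eventsEnabled: true`: it keys each download by the `guid` shared by the `Browser.downloadWillBegin` and `Browser.downloadProgress` events. `trackDownloads` and `allSettled` are hypothetical names.

```javascript
// Hypothetical tracker for concurrent downloads on a browser-level CDP session
function trackDownloads(client) {
  const downloads = new Map(); // guid -> { filename, url, receivedBytes, totalBytes, state }

  client.on('Browser.downloadWillBegin', ({ guid, suggestedFilename, url }) => {
    downloads.set(guid, { filename: suggestedFilename, url, receivedBytes: 0, totalBytes: 0, state: 'inProgress' });
  });

  client.on('Browser.downloadProgress', ({ guid, receivedBytes, totalBytes, state }) => {
    const entry = downloads.get(guid) || { filename: guid };
    Object.assign(entry, { receivedBytes, totalBytes, state });
    downloads.set(guid, entry);
    if (state === 'inProgress' && totalBytes > 0) {
      console.log(`${entry.filename}: ${Math.round((receivedBytes / totalBytes) * 100)}%`);
    }
  });

  // Resolves with all tracked downloads once none is still in progress
  function allSettled(timeoutMs = 120000) {
    return new Promise((resolve, reject) => {
      const timeout = setTimeout(() => {
        clearInterval(timer);
        reject(new Error('Timed out waiting for downloads'));
      }, timeoutMs);
      const check = () => {
        const entries = [...downloads.values()];
        if (entries.length > 0 && entries.every((d) => d.state !== 'inProgress')) {
          clearInterval(timer);
          clearTimeout(timeout);
          resolve(entries);
        }
      };
      const timer = setInterval(check, 100);
      check();
    });
  }

  return { downloads, allSettled };
}
```

Call `trackDownloads(client)` before triggering the downloads, then `await allSettled()` before `browser.close()`, since closing the browser cancels any transfer still running. Canceled downloads count as settled, so check each entry's `state` afterwards.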

Combining Puppeteer with HTTP Clients

For scenarios where direct downloading through Puppeteer is challenging, combining Puppeteer with dedicated HTTP clients like Axios can be an effective solution. This approach is particularly useful for bypassing complex JavaScript-based download triggers, handling large numbers of files efficiently, and overcoming certain anti-bot measures.

Process Overview:

- Use Puppeteer to navigate and extract file URLs.

- Employ an HTTP client (e.g., Axios) to download the files.

Example implementation:

```javascript
const puppeteer = require('puppeteer');
const axios = require('axios');
const fs = require('fs');
const path = require('path');

async function downloadFile(url, downloadPath) {
  const response = await axios({
    method: 'GET',
    url: url,
    responseType: 'stream'
  });

  // Stream the response body to disk and resolve once the file is written
  return new Promise((resolve, reject) => {
    const writer = fs.createWriteStream(downloadPath);
    response.data.pipe(writer);
    writer.on('finish', resolve);
    writer.on('error', reject);
  });
}

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();
  await page.goto('https://example.com/file-list');

  // Extract absolute URLs of linked PDF files
  const fileUrls = await page.evaluate(() =>
    Array.from(document.querySelectorAll('a[href$=".pdf"]')).map((a) => a.href)
  );

  const downloadDir = path.resolve(__dirname, 'downloads');
  fs.mkdirSync(downloadDir, { recursive: true }); // make sure the folder exists

  // Download files one at a time using Axios
  for (const url of fileUrls) {
    // Use only the URL path, so query strings don't end up in the file name
    const fileName = path.basename(new URL(url).pathname);
    await downloadFile(url, path.join(downloadDir, fileName));
    console.log(`Downloaded: ${url}`);
  }

  await browser.close();
})();
```
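Axios does not share the browser's cookies, so files behind a login usually fail to download this way unless the session is forwarded. A minimal sketch, assuming the cookie objects returned by `page.cookies()` (which include `name`, `value`, and `domain`); `buildSessionHeaders` is a hypothetical helper, and its domain matching is deliberately simplified (no path, expiry, or `Secure` checks).

```javascript
// Hypothetical helper: build Cookie and User-Agent headers for an HTTP client
// from a Puppeteer cookie list, sending only cookies whose domain matches.
function buildSessionHeaders(cookies, targetUrl, userAgent) {
  const host = new URL(targetUrl).hostname;
  const matching = cookies.filter((c) => {
    const domain = c.domain.replace(/^\./, '');
    return host === domain || host.endsWith(`.${domain}`);
  });

  const headers = {};
  if (matching.length > 0) {
    headers.Cookie = matching.map((c) => `${c.name}=${c.value}`).join('; ');
  }
  if (userAgent) headers['User-Agent'] = userAgent;
  return headers;
}
```

To use it, extend `downloadFile` to accept a headers object, compute `buildSessionHeaders(await page.cookies(), url, await browser.userAgent())` inside the loop, and pass the result as `headers` in the Axios request config.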

By understanding and applying these various methods, developers can effectively handle a wide range of file download scenarios using Puppeteer, enhancing their web scraping and automation capabilities.

Practical Use Cases

- Direct Download Using Fetch: Ideal for processing file content before saving or downloading files not directly linked to buttons.

- Simulating User Interaction: Best for standard download mechanisms and directly saving files to a specified directory.

- Using CDP: Provides advanced, fine-grained control over downloads, suitable for complex scenarios.

- Combining with HTTP Clients: Useful for bypassing complex triggers, handling multiple files, and overcoming anti-bot measures.

Conclusion

In summary, Puppeteer offers several robust methods for downloading files, each with unique advantages and use cases. By leveraging direct downloads with the Fetch API, simulating user interactions, utilizing the Chrome DevTools Protocol, or combining Puppeteer with HTTP clients like Axios, developers can tailor their approach to suit a variety of web scraping and automation needs.

Best Practices and Considerations for Downloading Files with Puppeteer

Introduction

Downloading files using Puppeteer is an essential task for web automation. Whether you need to scrape data, automate repetitive download tasks, or handle protected content, Puppeteer offers various tools to streamline the process. This guide covers the best practices and considerations to ensure efficient and reliable file downloads with Puppeteer. Key topics include setting up the download directory, handling file types, managing timeouts, and more.

Setting Up the Download Directory

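When downloading files with Puppeteer, set up a dedicated download directory so that downloaded files are organized and easy to find. To configure it:

- Create a specific folder for downloads.
- Point the browser at that folder through the DevTools Protocol, which is the documented way to control where Chromium saves downloads.

```javascript
// Assumes fs, path, and a launched browser from the earlier snippets
const downloadPath = path.resolve(__dirname, 'downloads');
fs.mkdirSync(downloadPath, { recursive: true });

const client = await browser.target().createCDPSession();
await client.send('Browser.setDownloadBehavior', { behavior: 'allow', downloadPath });
```

This approach helps maintain a clean workspace and simplifies file management (Puppeteer GitHub).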

Handling File Types and MIME Types

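Different file types may require specific handling. Whether Chrome displays a response or saves it as a download is decided by the response's `Content-Type` and `Content-Disposition` headers; changing the headers of the outgoing request has no effect on that decision. To force a download for a particular MIME type, change the response instead:

- Use the DevTools Protocol `Fetch` domain to pause responses before the browser processes them.
- Inspect each response's `Content-Type` and, for the types you want saved, serve the same body with a `Content-Disposition: attachment` header.

```javascript
const client = await page.target().createCDPSession();
await client.send('Fetch.enable', {
  patterns: [{ urlPattern: '*', resourceType: 'Document', requestStage: 'Response' }]
});

client.on('Fetch.requestPaused', async (event) => {
  const { requestId, responseStatusCode, responseErrorReason, responseHeaders = [] } = event;
  const typeHeader = responseHeaders.find((h) => h.name.toLowerCase() === 'content-type');
  const contentType = typeHeader ? typeHeader.value.toLowerCase() : '';

  // Let everything except successful PDF responses through unchanged
  if (responseErrorReason || responseStatusCode !== 200 || !contentType.startsWith('application/pdf')) {
    await client.send('Fetch.continueRequest', { requestId });
    return;
  }

  // Re-serve the same body with an attachment header so Chrome saves the file
  const { body, base64Encoded } = await client.send('Fetch.getResponseBody', { requestId });
  const headers = responseHeaders.filter((h) => h.name.toLowerCase() !== 'content-disposition');
  headers.push({ name: 'Content-Disposition', value: 'attachment' });
  await client.send('Fetch.fulfillRequest', {
    requestId,
    responseCode: responseStatusCode,
    responseHeaders: headers,
    body: base64Encoded ? body : Buffer.from(body).toString('base64')
  });
});
```

This technique is particularly useful for forcing downloads of files that might otherwise open in the browser, such as PDFs in Chrome's built-in viewer. Downloads must still be allowed with `setDownloadBehavior`, and if the file is opened with `page.goto()`, the navigation may reject with `net::ERR_ABORTED` because it turns into a download (Puppeteer API Documentation).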

Waiting for Downloads to Complete

Make sure downloads have finished before closing the browser or moving on; closing the browser too early cancels any download still in progress. Puppeteer has no built-in "download finished" event, so completion is usually detected in one of these ways:

- Use the `page.waitForSelector()` method to wait for a download confirmation element, if the site displays one.
- Implement a custom wait function that checks the download directory for file completion.

```javascript
// '#download-complete' is a placeholder for the site's own confirmation element
const waitForDownload = async (page, timeout = 60000) => {
  await page.waitForSelector('#download-complete', { timeout });
};
```

Waiting explicitly prevents premature script termination and ensures file integrity (Puppeteer Wait Documentation).
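The second option, checking the download directory, can be sketched as follows. It assumes Chrome's convention of writing an in-progress download to a temporary `.crdownload` file and renaming it when finished; `waitForDownloadedFile` and its options are hypothetical. Passing a snapshot of the files that existed before the click (`ignore`) keeps old files from being mistaken for the new download.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: poll `dir` until a new, finished file appears.
// Treats *.crdownload files as "still downloading".
async function waitForDownloadedFile(dir, { timeout = 60000, interval = 250, ignore = new Set() } = {}) {
  const deadline = Date.now() + timeout;
  while (Date.now() < deadline) {
    const entries = fs.existsSync(dir) ? fs.readdirSync(dir) : [];
    const inProgress = entries.some((name) => name.endsWith('.crdownload'));
    const finished = entries.filter((name) => !name.endsWith('.crdownload') && !ignore.has(name));
    if (!inProgress && finished.length > 0) {
      return path.join(dir, finished[0]);
    }
    await new Promise((resolve) => setTimeout(resolve, interval));
  }
  throw new Error(`No completed download appeared in ${dir} within ${timeout} ms`);
}
```

Typical usage: take `const before = new Set(fs.readdirSync(dir));`, click the download button, then `const file = await waitForDownloadedFile(dir, { ignore: before });`.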

Handling Authentication for Protected Downloads

When dealing with downloads that require authentication:

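- Use Puppeteer's `page.authenticate()` method to supply credentials for HTTP authentication challenges such as Basic auth. It does not fill in HTML login forms; for those, type into the form as shown earlier.
- Implement cookie-based authentication by setting cookies before navigating to the download page.

```javascript
// HTTP authentication (e.g. Basic auth): call before navigating
await page.authenticate({ username: 'user', password: 'pass' });

// or: reuse an existing session; authCookies is an array of cookie objects
// ({ name, value, domain, ... }), for example saved after an earlier login
await page.setCookie(...authCookies);
```

This ensures that the browser session has the necessary permissions to access and download protected files (Puppeteer Authentication Guide).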

Managing Download Timeouts

To prevent indefinite waiting for downloads:

- Set a reasonable timeout for download operations.

- Implement error handling for timeout scenarios.

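```javascript
const downloadTimeout = 120000; // 2 minutes
let timer;

try {
  await Promise.race([
    waitForDownload(page, 0), // 0 disables Puppeteer's own timeout; the race enforces the limit
    new Promise((_, reject) => {
      timer = setTimeout(() => reject(new Error('Download timeout')), downloadTimeout);
    })
  ]);
} catch (error) {
  console.error('Download failed:', error.message);
} finally {
  clearTimeout(timer); // don't let a pending timer keep the process alive
}
```

Clearing the timer in `finally` keeps a pending timeout from holding the Node.js process open after a successful download. This practice ensures that your script doesn't hang indefinitely on slow or failed downloads (JavaScript Promise Documentation).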

Handling Dynamic Download Buttons

Many websites use dynamic elements for initiating downloads. To handle these:

- Use Puppeteer's `page.waitForSelector()` to ensure the download button is visible before clicking it.
- Implement retry logic for intermittent failures.

```javascript
const clickDownloadButton = async (page, selector, maxRetries = 3) => {
  for (let i = 0; i < maxRetries; i++) {
    try {
      await page.waitForSelector(selector, { visible: true, timeout: 5000 });
      await page.click(selector);
      return;
    } catch (error) {
      if (i === maxRetries - 1) throw error;
      // page.waitForTimeout() was removed in recent Puppeteer releases; use a plain timer
      await new Promise((resolve) => setTimeout(resolve, 1000));
    }
  }
};
```

This approach improves the reliability of your download scripts, especially on dynamic websites (Puppeteer Click Documentation).

Monitoring Download Progress

For large files or multiple downloads, implementing progress monitoring can be beneficial:

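- Use the `fs.watch()` method to monitor the download directory for file changes.
- Implement a progress bar or logging mechanism to track download status.

```javascript
const fs = require('fs');
const path = require('path');

const monitorDownloadProgress = (downloadPath) => {
  const watcher = fs.watch(downloadPath, (eventType, filename) => {
    if (eventType !== 'change' || !filename) return;
    try {
      const stats = fs.statSync(path.join(downloadPath, filename));
      console.log(`Downloading ${filename}: ${stats.size} bytes`);
    } catch {
      // The temporary file may already have been renamed or removed
    }
  });
  return watcher; // call watcher.close() when the downloads are finished
};
```

This feature provides valuable feedback during long-running download operations. `fs.watch()` behaves differently across operating systems and does not always report a filename, so treat it as a best-effort signal (Node.js File System Documentation).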

Handling Network Errors and Retries

Network issues can disrupt downloads. Implement robust error handling and retry mechanisms:

- Catch network-related errors using try-catch blocks.

- Implement an exponential backoff strategy for retries.

```javascript
const downloadWithRetry = async (page, url, maxRetries = 3) => {
  for (let i = 0; i < maxRetries; i++) {
    try {
      await page.goto(url);
      await clickDownloadButton(page, '#download-btn');
      return;
    } catch (error) {
      console.error(`Download attempt ${i + 1} failed:`, error.message);
      if (i === maxRetries - 1) throw error;
      // Exponential backoff: wait 1 s, then 2 s, 4 s, ...
      await new Promise((resolve) => setTimeout(resolve, Math.pow(2, i) * 1000));
    }
  }
};
```

This approach increases the resilience of your download scripts against temporary network issues (Exponential Backoff Algorithm).
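The loop above doubles the wait after each failed attempt. A reusable variant, sketched here as an assumption rather than a Puppeteer API, wraps any async operation and adds random "full jitter" (a delay between 0 and the exponential cap) so that many scripts retrying at once do not hit the server in lockstep. `retryWithBackoff` and its option names are hypothetical.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: run fn, retrying up to `retries` more times on failure.
// The delay before retry n is random in [0, min(maxDelayMs, baseDelayMs * 2^n)).
async function retryWithBackoff(fn, { retries = 3, baseDelayMs = 1000, maxDelayMs = 30000 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await fn(attempt);
    } catch (error) {
      lastError = error;
      if (attempt === retries) break;
      const cap = Math.min(maxDelayMs, baseDelayMs * 2 ** attempt);
      const delay = Math.floor(Math.random() * cap);
      console.warn(`Attempt ${attempt + 1} failed (${error.message}); retrying in ${delay} ms`);
      await sleep(delay);
    }
  }
  throw lastError;
}
```

Note that `retries` counts retries after the first attempt, so `retries: 3` allows up to four attempts in total, for example `await retryWithBackoff(() => page.goto(url), { retries: 3 })`.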

Verifying Downloaded Files

After downloading, it's important to verify the integrity and completeness of the files:

- Check file size against expected size (if known).

- Implement checksum verification for critical downloads.

```javascript
const crypto = require('crypto');
const fs = require('fs');

const verifyFileChecksum = (filePath, expectedChecksum) => {
  return new Promise((resolve, reject) => {
    const hash = crypto.createHash('sha256');
    const stream = fs.createReadStream(filePath);
    stream.on('data', (data) => hash.update(data));
    stream.on('end', () => {
      const fileChecksum = hash.digest('hex');
      resolve(fileChecksum === expectedChecksum);
    });
    stream.on('error', reject);
  });
};
```

This practice ensures that downloaded files are complete and unaltered (Node.js Crypto Documentation).
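The checksum above needs a known expected hash. For the file-size check, and as a cheap guard against an HTML error page saved under a `.pdf` name, a sketch like the following can run first. `verifyDownloadedFile` is hypothetical; `expectedSize` (in bytes) could come from a `Content-Length` header, and `magic` is the file type's leading bytes (PDF files begin with `%PDF-`).

```javascript
const fs = require('fs');

// Hypothetical helper: quick size and signature checks on a downloaded file
function verifyDownloadedFile(filePath, { expectedSize, magic } = {}) {
  const { size } = fs.statSync(filePath);
  if (size === 0) return { ok: false, reason: 'file is empty' };
  if (expectedSize !== undefined && size !== expectedSize) {
    return { ok: false, reason: `expected ${expectedSize} bytes, got ${size}` };
  }
  if (magic) {
    // Read only the first few bytes and compare them with the expected signature
    const expected = Buffer.from(magic);
    const head = Buffer.alloc(expected.length);
    const fd = fs.openSync(filePath, 'r');
    try {
      fs.readSync(fd, head, 0, expected.length, 0);
    } finally {
      fs.closeSync(fd);
    }
    if (!head.equals(expected)) return { ok: false, reason: 'unexpected file signature' };
  }
  return { ok: true };
}
```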

Cleaning Up Temporary Files

Maintain a clean workspace by removing temporary files after successful downloads:

- Implement a cleanup function to remove partial downloads or temporary files.

- Schedule cleanup operations after each download or at the end of the script.

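```javascript
const fs = require('fs');
const path = require('path');

// Delete files in downloadPath whose names match `pattern`, e.g. /\.crdownload$/
const cleanupDownloads = (downloadPath, pattern) => {
  for (const file of fs.readdirSync(downloadPath)) {
    if (file.match(pattern)) {
      fs.unlinkSync(path.join(downloadPath, file));
    }
  }
};
```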

This practice prevents accumulation of unnecessary files and maintains disk space efficiency (Node.js File System Unlink Documentation).

Conclusion

By following these best practices and considerations, you can create robust and efficient file download scripts using Puppeteer. These techniques address common challenges in file downloading, ensuring reliability, security, and performance in your automation tasks. For further reading, consider exploring additional Puppeteer documentation and related topics on web automation.

Conclusion

In conclusion, Puppeteer offers a versatile and powerful framework for automating file downloads from the web. By leveraging its high-level API, developers can handle a wide range of scenarios, from simple direct downloads to more complex tasks involving dynamic content and authentication. The methods and best practices outlined in this guide, including the use of the browser's fetch feature, simulating user interactions, utilizing the Chrome DevTools Protocol, and combining Puppeteer with HTTP clients, provide a comprehensive toolkit for efficient and reliable file downloads.

It is essential to set up a dedicated download directory, handle various file types and MIME types, and manage download timeouts effectively. Additionally, implementing robust error handling, retry mechanisms, and verifying downloaded files ensure the reliability and integrity of your automation scripts. Monitoring download progress and cleaning up temporary files further enhance the efficiency of your workflow.

By following the guidelines and examples provided, you can harness the full potential of Puppeteer for your web automation and scraping needs. For further reading and advanced techniques, consider exploring additional Puppeteer documentation and related topics on web automation.

For more information, you can refer to the Puppeteer GitHub repository and related resources.