Java is one of the most popular and in-demand programming languages today. It lets you build highly scalable, reliable services as well as multi-threaded data extraction solutions. Let's check out the main concepts of web scraping with Java and review the most popular libraries for setting up your data extraction flow.

In this article, we're going to explore different aspects of Java web scraping: retrieving data with HTTP/HTTPS calls, parsing HTML data, and running a headless browser to render JavaScript and avoid getting blocked. All of these parts are essential, as not every website provides an API to access its data.

How to create a web scraper with Java?

To create a complete web scraper, you should cover the following features:

- data extraction (retrieve required data from the website)

- data parsing (pick only the required information)

- data storing/presenting

Let's create a simple Java web scraper that gets the title text from example.com, to see how each aspect is covered in practice:

package com.example.scraper;

import java.io.IOException;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

import okhttp3.OkHttpClient;

import okhttp3.Request;

import okhttp3.Response;

public class ExampleScraper {

public static void main(String[] args) throws IOException {

final ExampleScraper scraper = new ExampleScraper();

final String htmlContent = scraper.getContent();

final String extractedTitle = scraper.extractTitle(htmlContent);

System.out.println(extractedTitle);

}

private String getContent() throws IOException {

final OkHttpClient client = new OkHttpClient.Builder().build();

final String urlToScrape = "https://example.com";

final Request request = new Request.Builder().url(urlToScrape).build();

final Response response = client.newCall(request).execute();

return response.body().string();

}

private String extractTitle(String content) {

final Pattern titleRegExp = Pattern.compile("<title>(.*?)</title>", Pattern.DOTALL);

final Matcher matcher = titleRegExp.matcher(content);

matcher.find();

return matcher.group(1);

}

}

Each of the steps described above is called separately from the main function:

Getting data from the server

The data retrieval part of our scraper is performed by the getContent function:

private String getContent() throws IOException {

final OkHttpClient client = new OkHttpClient.Builder().build();

final String urlToScrape = "https://example.com";

final Request request = new Request.Builder().url(urlToScrape).build();

final Response response = client.newCall(request).execute();

return response.body().string();

}

The OkHttpClient library (we'll review it a bit later) lets us make an HTTP call to get the information from the web server that hosts the example.com content.

After receiving the response (using client.newCall), we can get the response body containing the page's HTML.

Extracting data from the HTML

The example.com HTML content is full of HTML/CSS markup, which is not what we're targeting, so we need to extract the exact text data (in our example, the page's title).

private String extractTitle(String content) {

final Pattern titleRegExp = Pattern.compile("<title>(.*?)</title>", Pattern.DOTALL);

final Matcher matcher = titleRegExp.matcher(content);

matcher.find();

return matcher.group(1);

}

To get this job done, we're using a regular expression (RegExp). It's not the easiest way to extract text data, as some developers might not be too familiar with regular expression rules. Still, the benefit is that it doesn't require any third-party dependencies.

We'll discover a more comfortable way to deal with HTML parsing a bit later in this article.
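
One caveat about the regular expression approach: the extractTitle method shown earlier calls matcher.group(1) without checking the result of matcher.find(), so a page without a title tag would end in an IllegalStateException. Here is a minimal sketch of a more defensive variant, using only the JDK. The class name SafeTitleExtractor and the Optional return type are my own choices for illustration, not part of the original scraper:

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: a defensive variant of the article's extractTitle method.
final class SafeTitleExtractor {

    // Compiled once and reused; matches <title>, <TITLE>, or a title tag with attributes.
    private static final Pattern TITLE = Pattern.compile(
            "<title[^>]*>(.*?)</title>",
            Pattern.DOTALL | Pattern.CASE_INSENSITIVE);

    // Returns the trimmed title text, or an empty Optional when no title tag is found.
    static Optional<String> extractTitle(String html) {
        final Matcher matcher = TITLE.matcher(html);
        return matcher.find()
                ? Optional.of(matcher.group(1).trim())
                : Optional.empty();
    }

    public static void main(String[] args) {
        final String withTitle = "<html><head><title>Example Domain</title></head></html>";
        final String withoutTitle = "<html><body>No title here</body></html>";

        System.out.println(extractTitle(withTitle).orElse("(no title)"));
        System.out.println(extractTitle(withoutTitle).orElse("(no title)"));
    }
}
```

Returning an Optional pushes the "no title" decision to the caller, which is handy once the scraper visits pages you don't control.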

Presenting data using console

The last but not least part of our simple web scraper is presenting the data to the end user:

System.out.println(extractedTitle);

Not the most impressive part of the program, but this step is required to make use of the web scraping results. You can replace it with an API call response, a DB storing function, or displaying the data in a UI.
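
As an illustration of swapping the console output for a storing step, here is a minimal sketch that appends each result to a CSV file using only the JDK. The class name CsvResultStore, the file name titles.csv, and the two-column layout (URL, title) are assumptions made for this example:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;
import java.util.Collections;

// Hypothetical storage step: appends scraped titles to a CSV file.
final class CsvResultStore {

    // Wraps a field in double quotes and doubles any embedded quotes (common CSV convention).
    static String escape(String field) {
        return "\"" + field.replace("\"", "\"\"") + "\"";
    }

    // Builds one CSV row: the page URL followed by the extracted title.
    static String toCsvLine(String url, String title) {
        return escape(url) + "," + escape(title);
    }

    // Appends a row to the file, creating the file on first use.
    static void append(Path file, String url, String title) throws IOException {
        Files.write(file,
                Collections.singletonList(toCsvLine(url, title)),
                StandardCharsets.UTF_8,
                StandardOpenOption.CREATE,
                StandardOpenOption.APPEND);
    }

    public static void main(String[] args) throws IOException {
        append(Paths.get("titles.csv"), "https://example.com", "Example Domain");
    }
}
```

In the first example, the call to System.out.println(extractedTitle) could then be replaced with a call to CsvResultStore.append.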

Let's move forward and check out valuable tools that cover data extraction needs.

How to make a request: HTTP client libraries

Making an HTTP request is the basis of most web scrapers, as website data is served over this widespread protocol. Sometimes an HTTP client may be the only library a web scraper needs, as it covers requesting and receiving HTML content from the server.

We will check out several of the most common libraries, with examples of making requests using each.

HttpURLConnection / HttpsURLConnection

HttpURLConnection is the most mature of the clients we're comparing, and probably the most used in the Java ecosystem, having been around since version 1.1 of the JDK. Its HTTPS-supporting counterpart, HttpsURLConnection, was introduced in Java 1.4.

The main advantage of using those classes is that they will be available in any version of Java you're using.

The following code snippet shows how to get the example.com HTML content:

package com.example.scraper;

import java.net.MalformedURLException;

import java.net.URL;

import java.io.*;

import javax.net.ssl.HttpsURLConnection;

public class ExampleScraper {

public static void main(String[] args) {

new ExampleScraper().scrape();

}

private void scrape() {

final String httpsUrl = "https://example.com/";

try {

final URL url = new URL(httpsUrl);

final HttpsURLConnection con = (HttpsURLConnection) url.openConnection();

System.out.println("****** Content of the URL ********");

final BufferedReader br = new BufferedReader(new InputStreamReader(con.getInputStream()));

String input;

while ((input = br.readLine()) != null){

System.out.println(input);

}

br.close();

} catch (MalformedURLException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

}

}

With the expected output:

****** Content of the URL ********

<!doctype html>

<html>

<head>

<title>Example Domain</title>

....
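
The snippet above relies on the connection defaults. In a scraper you would usually want timeouts and an explicit User-Agent header, so here is a rough sketch of configuring the connection before any data is read. The timeout values and the ExampleScraper/1.0 agent string are arbitrary assumptions, and the class name ConfiguredConnection is hypothetical:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URI;

// Hypothetical helper: prepares an HttpURLConnection with timeouts and a User-Agent.
final class ConfiguredConnection {

    // Assumed value for illustration; use whatever identifies your scraper.
    static final String USER_AGENT = "ExampleScraper/1.0";

    // Creates the connection object and configures it. No network traffic happens here;
    // the request is only sent once the response is read.
    static HttpURLConnection open(String url) {
        try {
            final HttpURLConnection con =
                    (HttpURLConnection) URI.create(url).toURL().openConnection();
            con.setRequestMethod("GET");
            con.setConnectTimeout(5_000);   // give up connecting after 5 seconds
            con.setReadTimeout(10_000);     // give up waiting for data after 10 seconds
            con.setRequestProperty("User-Agent", USER_AGENT);
            return con;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        final HttpURLConnection con = open("https://example.com/");
        System.out.println("HTTP status: " + con.getResponseCode());
        con.disconnect();
    }
}
```

Since HttpsURLConnection extends HttpURLConnection, the same helper works for both http and https URLs.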

So, if it works, why do we need to consider any alternatives? The reasons are the following:

- only a synchronous/blocking API

- no HTTP/2 support

- no compression support

- no forms support

- a generally low-level API with few conveniences beyond the items above

I'd recommend avoiding this library if you can use a third-party alternative.

HttpURLConnection documentation

OkHttpClient

The next option on my list (and the one I find handiest, thanks to my Android development experience) is OkHttpClient by Square.

It's full of features like native support for HTTP/2 and TLS 1.3+, the ability to fail over between multiple IP addresses, and content compression with Deflate, GZip, and Brotli. Its capacity to recover from failed connection attempts is another reason not to overlook this library.

It can be added as a dependency using Maven:

<dependency>

<groupId>com.squareup.okhttp3</groupId>

<artifactId>okhttp</artifactId>

<version>4.9.1</version>

</dependency>

Let's rewrite our example.com scraper using OkHttpClient:

package com.example.scraper;

import java.io.IOException;

import okhttp3.OkHttpClient;

import okhttp3.Request;

import okhttp3.Response;

public class ExampleScraper {

public static void main(String[] args) {

new ExampleScraper().scrape();

}

private void scrape() {

final String httpsUrl = "https://example.com/";

try {

final OkHttpClient client = new OkHttpClient.Builder().build();

final Request request = new Request.Builder()

.url(httpsUrl)

.build();

final Response response = client.newCall(request).execute();

System.out.println("****** Content of the URL ********");

System.out.println(response.body().string());

} catch (IOException e) {

e.printStackTrace();

}

}

}

In a more concise way, we've received the same result.

What are the downsides of OkHttpClient?

Since version 4, OkHttp has been written in Kotlin, so developers who are not familiar with Kotlin may find it hard to debug. Also, the Kotlin standard library is pulled in as a transitive dependency.

Jetty HttpClient

Jetty HttpClient is another great modern alternative to the standard HTTP client. It uses entirely non-blocking code internally and provides both synchronous and asynchronous APIs. HTTP/2 support is available, but it requires including an additional library.

To add it as a Maven dependency, just add the following node to the pom.xml:

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-client</artifactId>

<version>11.0.4</version>

</dependency>

As is our tradition, let's write the HTML scraper for example.com using Jetty HttpClient:

package com.example.scraper;

import org.eclipse.jetty.client.HttpClient;

import org.eclipse.jetty.client.api.ContentResponse;

public class ExampleScraper {

public static void main(String[] args) {

new ExampleScraper().scrape();

}

private void scrape() {

final String httpsUrl = "https://example.com/";

try {

final HttpClient client = new HttpClient();

client.start();

final ContentResponse res = client.GET(httpsUrl);

System.out.println("****** Content of the URL ********");

System.out.println(res.getContentAsString());

client.stop();

} catch (Exception e) {

e.printStackTrace();

}

}

}

The Jetty client supports HTTP/2 and is very configurable, which makes it a good alternative to OkHttpClient. It is simple to use and actively maintained, so this library can be the right choice.

Jetty HttpClient documentation

Parsing HTML

To get meaningful information out of a bunch of HTML tags, we need to perform HTML data extraction (also known as HTML parsing).

Extracted data can be text, an image, a video, a URL, a file, etc. In our simple parser, we've used a RegExp, but it's not the best way to deal with HTML, as the complexity of such a solution grows with each new data unit to parse.

Still, several great libraries can simplify the data extraction flow.

We're going to use the title extraction method from the first part of this article to compare its simplicity with that of HTML parsing libraries:

private String extractTitle(String content) {

final Pattern titleRegExp = Pattern.compile("<title>(.*?)</title>", Pattern.DOTALL);

final Matcher matcher = titleRegExp.matcher(content);

matcher.find();

return matcher.group(1);

}

Let's rewrite our simple scraper's title extractor with each of them:

jsoup: Java HTML Parser

jsoup is a Java-based library that provides a very convenient API to extract and manipulate data, using the best of DOM, CSS, and jQuery-like methods.

You can use a URL, a file, or a string as input. Also, the jsoup team claims that it handles old and lousy HTML while supporting HTML5 standards. Easy use of CSS selectors and DOM traversal makes this library one of my favorites.

First, you need to add the Maven dependency:

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.13.1</version>

</dependency>

Our parsing method with jsoup will look like this:

private String extractTitle(String content) {

final Document doc = Jsoup.parse(content);

final Element titleElement = doc.select("title").first();

return titleElement.text();

}

The basic concept behind these lines is to load the HTML content into jsoup using the Jsoup.parse method, which allows us to find, access, and manipulate DOM elements. Then we apply a CSS selector for the title tag and get the first match.

jsoup Cookbook (documentation)

HTMLCleaner: transform HTML to well-formed XML

The next candidate for our HTML parsing function is HTMLCleaner.

It is also one of the popular libraries for HTML manipulation and DOM traversal. The library itself is old but still maintained, so getting a bug fix or an update shouldn't be a problem. Still, the architecture and the library's API are a bit dated and can be clunky when you need to manipulate HTML.

To install it as a dependency:

<dependency>

<groupId>net.sourceforge.htmlcleaner</groupId>

<artifactId>htmlcleaner</artifactId>

<version>2.24</version>

</dependency>

As usual, let's check out how to use it in our simple web scraper:

private String extractTitle(String content) {

final HtmlCleaner cleaner = new HtmlCleaner();

final TagNode node = cleaner.clean(content);

final TagNode titleNode = node.findElementByName("title", true);

return titleNode.getText().toString();

}

My impression of HTMLCleaner is that its approach to HTML parsing is not widely used across the web scraping community, which might be confusing. Also, the lack of documentation makes it hard to find relevant information.
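
To show why transforming HTML into well-formed XML can be useful at all: once the markup is well-formed, the XML tooling that ships with the JDK can query it with XPath. The sketch below does not call HTMLCleaner. It assumes the input string is already well-formed (a hand-written stand-in for cleaned output), and the class name XmlTitleExtractor is hypothetical:

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

// Hypothetical helper: queries well-formed markup with the JDK's built-in XPath support.
final class XmlTitleExtractor {

    // Parses the markup as XML and returns the text of /html/head/title.
    // Throws IllegalArgumentException if the input is not well-formed XML.
    static String extractTitle(String wellFormedMarkup) {
        try {
            final Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(wellFormedMarkup)));
            return XPathFactory.newInstance()
                    .newXPath()
                    .evaluate("/html/head/title", doc);
        } catch (Exception e) {
            throw new IllegalArgumentException("Input is not well-formed XML", e);
        }
    }

    public static void main(String[] args) {
        // Assumption: this string stands in for markup that was already cleaned.
        final String cleaned =
                "<html><head><title>Example Domain</title></head>"
                + "<body><p>Hello</p></body></html>";
        System.out.println(extractTitle(cleaned));
    }
}
```

Real-world HTML is rarely well-formed, which is exactly the gap a cleaning library is meant to close before a step like this.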

Headless browsers: how to scrape a dynamic website with Java

Well, we've reached the most exciting part of this article: headless browsers.

Modern websites tend to use the SPA (Single Page Application) approach to serve content, which means that it's not enough for your web scraper to just make an HTTP request to a server to receive the content. It also requires executing the web page's JavaScript code, which loads data dynamically. All modern browsers support this and do it behind the scenes, so you may not even notice it while surfing the web.

Also, a website can use a simpler technique for loading content dynamically: XHR (XMLHttpRequest). It provides a better experience for end users but makes it harder to extract data from such web pages.

What is the most straightforward way of overcoming it? Sure, let's use a browser (and pretend to be a real user)!

Simple dynamic website

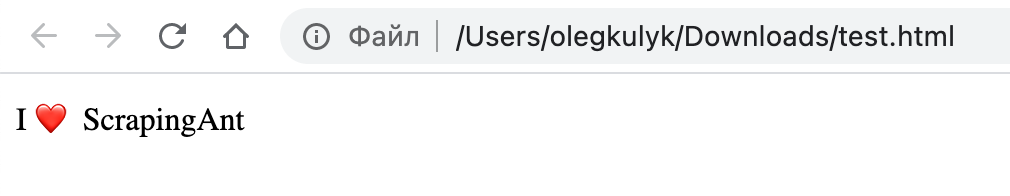

For demonstration purposes, I've created a simple dynamic website with the following content (source code can be found here: https://github.com/kami4ka/dynamic-website-example/blob/main/index.html):

<html>

<head>

<title>Dynamic Web Page Example</title>

<script>

window.addEventListener("DOMContentLoaded", function() {

document.getElementById("test").innerHTML="I ❤️ ScrapingAnt"

}, false);

</script>

</head>

<body>

<div id="test">Web Scraping is hard</div>

</body>

</html>

As we can observe, it has a div with the following text inside: Web Scraping is hard.

Still, when you open it in your browser, this text will be different because of the internal JavaScript function:

<script>

window.addEventListener("DOMContentLoaded", function() {

document.getElementById("test").innerHTML="I ❤️ ScrapingAnt"

}, false);

</script>

You can try it by visiting: https://kami4ka.github.io/dynamic-website-example/
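
To make the problem concrete, here is a small sketch of what a scraper without a JavaScript engine ends up with. It applies a regular expression to an embedded copy of the page source shown above (embedded as a string so that the example runs offline). The class name RawMarkupCheck is hypothetical:

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical demo: parsing the raw markup never runs the page's script.
final class RawMarkupCheck {

    // Copy of the demo page source; the heart emoji is written with Unicode escapes.
    static final String RAW_HTML =
            "<html><head><title>Dynamic Web Page Example</title>"
            + "<script>window.addEventListener(\"DOMContentLoaded\", function() {"
            + "document.getElementById(\"test\").innerHTML=\"I \u2764\uFE0F ScrapingAnt\""
            + "}, false);</script></head>"
            + "<body><div id=\"test\">Web Scraping is hard</div></body></html>";

    private static final Pattern TEST_DIV =
            Pattern.compile("<div id=\"test\">(.*?)</div>", Pattern.DOTALL);

    // Returns the text inside the div with id "test", or an empty string if it is missing.
    static String divText(String html) {
        final Matcher matcher = TEST_DIV.matcher(html);
        return matcher.find() ? matcher.group(1) : "";
    }

    public static void main(String[] args) {
        // Prints the original text, because nothing here executes the script.
        System.out.println(divText(RAW_HTML));
    }
}
```

The div still contains "Web Scraping is hard", since the script is just text to a plain HTTP client or a regular expression.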

Our next step will be to try scraping this page with headless browsers.

HtmlUnit: headless web browser written in Java

HtmlUnit is a "GUI-Less browser for Java programs". It means that this library aims to support all the possible features of modern browsers, making it suitable for both web scraping and UI/end-to-end testing.

To install it as a dependency, add the following lines:

<dependency>

<groupId>net.sourceforge.htmlunit</groupId>

<artifactId>htmlunit</artifactId>

<version>2.50.0</version>

</dependency>

HtmlUnit requires creating a WebClient to make a request. It also allows us to enable or disable JavaScript execution, so we can observe both behaviors while scraping our simple dynamic page.

At first, I'll disable JavaScript:

import com.gargoylesoftware.htmlunit.WebClient;

import com.gargoylesoftware.htmlunit.html.HtmlPage;

public class ExampleDynamicScraper {

public static void main(String[] args) {

try {

final WebClient client = new WebClient();

client.getOptions().setCssEnabled(false);

client.getOptions().setJavaScriptEnabled(false);

final HtmlPage page = client.getPage("https://kami4ka.github.io/dynamic-website-example/");

System.out.println(page.asNormalizedText());

} catch(Exception e) {

e.printStackTrace();

}

}

}

The asNormalizedText function returns a text representation of the page as it would be displayed.

As you've probably figured, the output will be the following:

Dynamic Web Page Example

Web Scraping is hard

Still, with JavaScript enabled (client.getOptions().setJavaScriptEnabled(true)), we'll observe another result:

Dynamic Web Page Example

I ❤️ ScrapingAnt

Should we use an HTML parsing library with HtmlUnit? Probably not, as it already comes with plenty of DOM manipulation functionality. For example, you can use XPath selectors, get elements by id, and much more.

So, to retrieve our div text, we can use the getElementById method of the page instance:

final HtmlPage page = client.getPage("https://kami4ka.github.io/dynamic-website-example/");

final DomElement element = page.getElementById("test");

System.out.println(element.asNormalizedText());

I'd recommend HtmlUnit to everyone who is getting started with Java web scraping.

Playwright: Chrome, Firefox and WebKit web scraping

Meet Playwright, a cross-language library to control Chrome, Firefox, and WebKit. I've called this library Puppeteer's successor in numerous previous articles, but given the current range of supported programming languages, it's a real competitor to Selenium.

Playwright's API is simple and provides the ability to control the most popular browsers. Also, migrating your code from NodeJS with Puppeteer to Java with Playwright should take little effort, as the APIs are similar.

To run Playwright, simply add the following dependency to your Maven project:

<dependency>

<groupId>com.microsoft.playwright</groupId>

<artifactId>playwright</artifactId>

<version>1.12.0</version>

</dependency>

And that's all. You don't need to worry about the browser dependencies, as Playwright will handle them.

Let's proceed with our simple dynamic web page scraper using Playwright:

import com.microsoft.playwright.*;

public class ExampleDynamicScraper {

public static void main(String[] args) {

try (Playwright playwright = Playwright.create()) {

final BrowserType chromium = playwright.chromium();

final Browser browser = chromium.launch();

final Page page = browser.newPage();

page.navigate("https://kami4ka.github.io/dynamic-website-example/");

final ElementHandle contentElement = page.querySelector("[id=test]");

System.out.println(contentElement.innerText());

browser.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

The output will be the expected one:

I ❤️ ScrapingAnt

I'm a big fan of Playwright, as this library allows me to simplify and unify my Java codebase. Also, it's a production-grade library maintained by Microsoft, so GitHub issues tend to get resolved pretty fast.

If you are still using Selenium, I'd recommend trying Playwright for new projects.

Web Scraping API: cloud-based headless browser

Web Scraping API is the simplest way of using a headless browser, rotating proxies, and Cloudflare avoidance without managing them yourself.

It's a service that runs a whole headless Chrome cluster that is connected to a large proxy pool. It allows you to scrape numerous web pages in parallel without dealing with performance issues, as the browsers run in the cloud.

Let's have a look at web scraping API integration:

import com.google.gson.Gson;

import okhttp3.OkHttpClient;

import okhttp3.Request;

import okhttp3.Response;

import java.net.URLEncoder;

import java.nio.charset.StandardCharsets;

public class ExampleDynamicScraper {

public static void main(String[] args) throws Exception {

ExampleDynamicScraper example = new ExampleDynamicScraper();

String targetURL = "https://kami4ka.github.io/dynamic-website-example/";

// Get ScrapingAnt response

ScrapingAntResponse response = example.getContent(targetURL);

System.out.println(response.content);

}

// Make API request to ScrapingAnt

// ScrapingAntResponse is just a POJO with 'content' property

// Don't forget to use your API token from ScrapingAnt dashboard

private ScrapingAntResponse getContent(final String url) throws Exception {

final Gson gson = new Gson();

final OkHttpClient client = new OkHttpClient.Builder().build();

final String baseUrl = "https://api.scrapingant.com/v1/general?url=";

final String encodedTarget = URLEncoder.encode(url, StandardCharsets.UTF_8);

final Request request = new Request.Builder()

.addHeader("x-api-key", "<YOUR_SCRAPING_ANT_API_TOKEN>")

.url(baseUrl + encodedTarget)

.build();

try (final Response response = client.newCall(request).execute()) {

return gson.fromJson(response.body().string(), ScrapingAntResponse.class);

}

}

}

The code snippet looks more extensive than the previous ones, though it mainly handles making an HTTP call to the ScrapingAnt server.

To obtain your API token, please log in to the ScrapingAnt dashboard. It's free for personal use.

The idea behind it is to specify the API token while making a call with OkHttpClient and to parse the JSON response using Gson. The result contains already rendered HTML, which is ready for the less CPU-consuming operation: parsing.
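
The snippet above references a ScrapingAntResponse class without showing it. Going by the code comment, it only needs a content property, so a minimal sketch could look like the one below. The ScrapingAntUrl helper is a hypothetical addition that isolates the URL-building step, so you can see what the encoded request URL looks like:

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Minimal POJO matching the 'content' property mentioned in the snippet above.
// Gson fills the field by name; any other JSON properties are ignored by default.
final class ScrapingAntResponse {
    public String content;
}

// Hypothetical helper: builds the request URL the same way getContent does.
final class ScrapingAntUrl {

    static final String BASE_URL = "https://api.scrapingant.com/v1/general?url=";

    // The target URL must be percent-encoded, because it is passed as a query parameter.
    static String forTarget(String targetUrl) {
        return BASE_URL + URLEncoder.encode(targetUrl, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(forTarget("https://kami4ka.github.io/dynamic-website-example/"));
    }
}
```

Note that URLEncoder.encode(String, Charset) requires Java 10 or newer, the same as in the snippet above.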

What are the benefits of using a web scraping API?

- It's free for personal use

- Thread-safe, as it only requires dealing with HTTP calls

- Unlimited concurrent requests

- Cloudflare avoidance out-of-the-box

- Cheaper than handling your proxy pool

Conclusion

The Java web scraping topic is enormous, so it's hard to cover all its extensive parts, like proxies, multithreading, deployment, etc., in one article. Still, I hope this article helps you take the first steps in web scraping and in structuring your data extraction. The knowledge described here is the bare minimum for creating a fully featured web scraper.

Also, I've intentionally left Selenium out of this article, as it is not the most straightforward library in my view. Still, if you're interested in Selenium web scraping, don't hesitate to contact me. Such a topic requires a separate extensive article.

I suggest continuing with the following links to learn more:

- HTML Parsing Libraries - Java - HTML parsing libraries overview

- Web Scraping with Javascript (NodeJS) - to learn more about web scraping with Javascript

- How to download a file with Playwright? - downloading files with Playwright (Javascript)

- How to submit a form with Playwright? - submitting forms with Playwright (Javascript)

Happy Web Scraping, and don't forget to keep your dependencies up-to-date 🆕